The AI Assistant allows you to harness the power of AI to help construct and analyze simulating models and Causal Loop Diagrams (CLDs). The models and CLDs created will include the connections and feedback loops applicable to the system being represented. The analysis is based on the current model.

The AI Assistant works by allowing you to connect to different LLM-based AI engines, provide prompts, and get results as a Causal Loop Diagram, simulation model, or explanation. CLDs are built with specified polarities and explanations for the connections being made. Simulating models contain equations, units, and documentation, though they may also have errors. Both can be edited to refine and correct the generated content, either directly or through continued interaction with the AI engine. The AI Assistant uses an open source gateway to commercially available AI engines. The gateway can take advantage of innovations in those engines while also being improved over time through research within the system dynamics community.

Select Open AI Assistant Panel from the Window Menu. The panel will open:

Note The AI Assistant is a dockable panel. See Docking, Undocking, Hiding, and Closing Panels.

AI Assistant (Prompt)

Use this to tell the assistant what you want to do. For example "Build me a model of population growth with a constrained food supply." could be used to generate a simple population model while "Explain the decrease in infections after time 30." could be used to get insights into a model of an epidemic. You can provide as much context as desired in the prompt - experiment to see what works best for your situation.

Submit

Use this to submit your query to the sd-ai Gateway and then wait for a response. The time it takes to respond to a query is variable, but anywhere from 30 seconds to several minutes is common. After submitting your query there will be an option to cancel:

If you click on the Abort button, the query is cancelled and nothing will be done.

Note When you click on Submit, all information you have entered into the AI Assistant, along with the entire content of your model, will be sent to the sd-ai Gateway and through that to other engines. The content may be retained and shared by those engines.

AI Reasoning

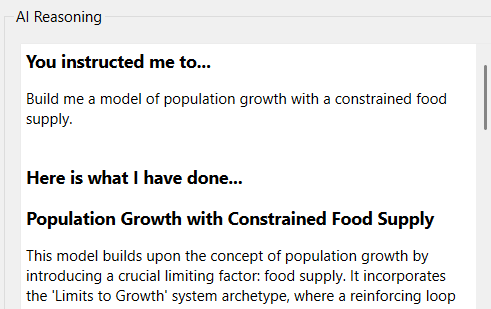

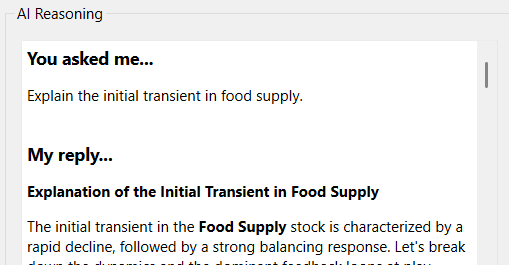

This reports back what the reasoning was for the response provided. If you are using the AI Assistant in Build mode it will explain the relationships it has created:

If you are using the AI Assistant in Discuss mode it will try to answer your prompt.

If you have multiple interactions with the AI Assistant the log for each is kept, with the newest entry at the top.

Clear Log

Each interaction with the AI Assistant is logged, and this log is kept when the model is saved. Use the Clear Log button to remove the log content. If you do this, the model will be marked as having its log cleared.

The majority of the additional content in the panel is provided by the sd-ai Gateway, and will be different based on both your selection of which sd-ai gateway to use and which model from that gateway to use. It may also change as developments in the sd-ai project occur. But there are some elements that will be common.

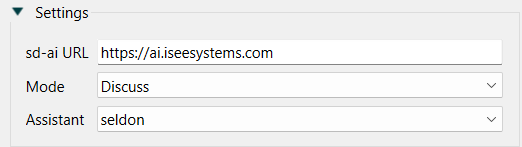

sd-ai URL is the location of the sd-ai Gateway you want to use. By default this is https://ai.iseesystems.com, but you can point it to any endpoint running the node-based engine, including a port on your local computer (localhost).

Mode lets you choose between different ways to interact with the AI Assistant. Currently, the three choices are:

Build which allows for the construction and refinement of models. The response will create models from scratch, or add variables and fill in their equations and units in response to the prompt. Typically you will end up with a working model that can simulate and has few or no errors in its units.

Discuss which can be used to ask questions about an existing model relating to structure or behavior. These questions can be about formulation and additional variables, but no changes will be made to model structure. Instead suggestions will be made so that you can then update the model as you see fit.

Support which can be used for more technical inquiries related to an existing model, currently with a focus on behavioral analysis of the model. This requires a working model in which LTM has been run.

assistant is the name of the assistant at the sd-ai gateway you want to use. If you are working with a CLD the default is simply called qualitative. If you are working with a simulation model the default is called quantitative. When using Discuss the default is called seldon, and for Support it is ltm-narrative. These defaults are the best choices based on the current state of research into getting answers about structure and behavior from large language models.

Selecting one of the alternatives, normally labeled quantitative-experimental, qualitative-experimental, or seldon-experimental, will allow you more control over endpoints and prompt engineering as described in sd-ai Gateway.

For support you will get a list of available support options:

ltm-narrative will take the Loops That Matter™ Overview results from the current model run and generate a narrative explaining behavior in terms of dominant feedback. It will also create Loop score variables corresponding to the important loops. If LTM information is not available, you will receive an error message.

The remaining inputs are determined by the sd-ai Gateway you have connected to. There are likely to be some basic ones that are always present. These are:

Google, Open AI API, and Anthropic Keys let you specify the key to be used when working with the AI Engine. If you leave this blank, a default key will be used. The default key is likely to work for most queries, though it may have limitations on which of the engine's LLMs can be used. Leaving this blank will only work with the default sd-ai gateway. For any other gateway you will need to specify a key.

Problem Statement is a description of the problem you are trying to work on. This should be written in plain English. There are no specific requirements for form or content, but generally the more detailed you make this the better the response from the AI engine is likely to be.

Background Knowledge is information that will help in understanding the questions you have about relationships between concepts that the AI can help with. Pasting substantial amounts of reference material may help in guiding the response of the AI engine.

Behavioral Description is normally a table of output of the important variables in your model for a run of interest. Simply copy and paste the contents of the table. This option is not available when building a model.

Note there are no minimum or maximum lengths for any fields.
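The rule for the key fields described above (a blank key only works with the default gateway) can be summarized in a few lines. This is a minimal sketch for illustration only, not code from Stella or the sd-ai project; the function name `key_required` and the example key are hypothetical, and only the default URL is taken from this page.

```python
from urllib.parse import urlparse

# Default gateway URL as stated on this page.
DEFAULT_GATEWAY = "https://ai.iseesystems.com"


def key_required(gateway_url: str, api_key: str = "") -> bool:
    """Return True when a key still has to be entered for this gateway.

    Hypothetical helper: a blank key is acceptable only when the
    gateway is the default one; any other gateway needs its own key.
    """
    if api_key.strip():
        return False  # a key was supplied, nothing more is needed
    host = urlparse(gateway_url).hostname or ""
    default_host = urlparse(DEFAULT_GATEWAY).hostname
    return host != default_host


print(key_required("https://ai.iseesystems.com"))       # default gateway, blank key
print(key_required("http://localhost:3000"))            # local gateway, blank key
print(key_required("http://localhost:3000", "my-key"))  # local gateway, key supplied
```

The comparison is made on the host name only, so a trailing slash or path on the default URL does not change the result.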

If you select any of the -experimental Assistants there will be additional fields to input information. These are used for Prompt Engineering as described below.

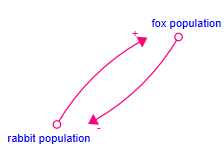

When starting with a blank CLD, the first time you hit Submit it will, if successful, generate a new diagram. The diagram will be drawn on the page and is fully editable, just as any diagram would be. For example, the query "How do rabbit and fox populations relate to one another?" might generate the diagram:

And provide the reasoning:

I've added the following new relationships for you...

1. rabbit population -->(+) fox population

More rabbits provide more food for foxes, supporting a larger fox population.

Polarity Reasoning

An increase in rabbit population leads to an increase in fox population due to more available food.

2. fox population -->(-) rabbit population

More foxes lead to more predation on rabbits, reducing the rabbit population.

Polarity Reasoning

An increase in fox population leads to a decrease in rabbit population due to higher predation.
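The two links above close a feedback loop between the populations. As a minimal sketch (not part of Stella or the sd-ai Gateway; the `Link` type and `loop_polarity` helper are hypothetical names used only for illustration), the polarity of a loop can be found by multiplying the signs of its links: a negative product indicates a balancing loop, a positive product a reinforcing one.

```python
from dataclasses import dataclass
from math import prod


@dataclass(frozen=True)
class Link:
    """One causal link in a CLD (hypothetical structure, for illustration)."""
    source: str
    target: str
    polarity: int  # +1 for "-->(+)", -1 for "-->(-)"


def loop_polarity(links):
    """Classify a closed loop by the product of its link polarities."""
    # Check that the links chain head-to-tail and close back on the start.
    for current, following in zip(links, links[1:] + links[:1]):
        if current.target != following.source:
            raise ValueError("links do not form a closed loop")
    product = prod(link.polarity for link in links)
    return "reinforcing" if product > 0 else "balancing"


# The two relationships from the reasoning above.
rabbit_fox_loop = [
    Link("rabbit population", "fox population", +1),
    Link("fox population", "rabbit population", -1),
]

print(loop_polarity(rabbit_fox_loop))  # balancing
```

One positive and one negative link give a negative product, so the rabbit and fox loop is balancing: growth in either population is eventually checked by the other.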

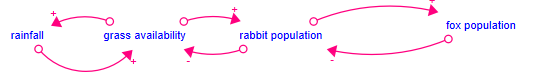

After making additions and adjustments to the diagram so that it looks like this:

You could ask "How does the rabbit population impact grass availability?" This might yield something like:

Here the connection from grass to rainfall seems like it would occur on a much longer time scale than the rabbit and fox population dynamics, so you might want to remove it.

Hopefully it is clear that this is not an "all at once" process. Instead the expectation is that the diagrams would be refined and adjusted till they yield something that is useful.

The background information and problem statement you create will be saved with the model you are working on so that you can reopen the model and pick up where you left off and add additional queries.

Prompt engineering is the creation of text surrounding the problem-specific information that gives sufficient instructions to the AI engines to get usable results. As a research topic, this can be approached through contributions to the sd-ai Gateway. Short of that, there is an advanced option available from the Assistant dropdown that allows you to edit the prompts and experiment directly in Stella. If you find changes that seem to improve results, you can share your suggestions through the sd-ai project.